Harmful Surveillance and Investigative Technologies

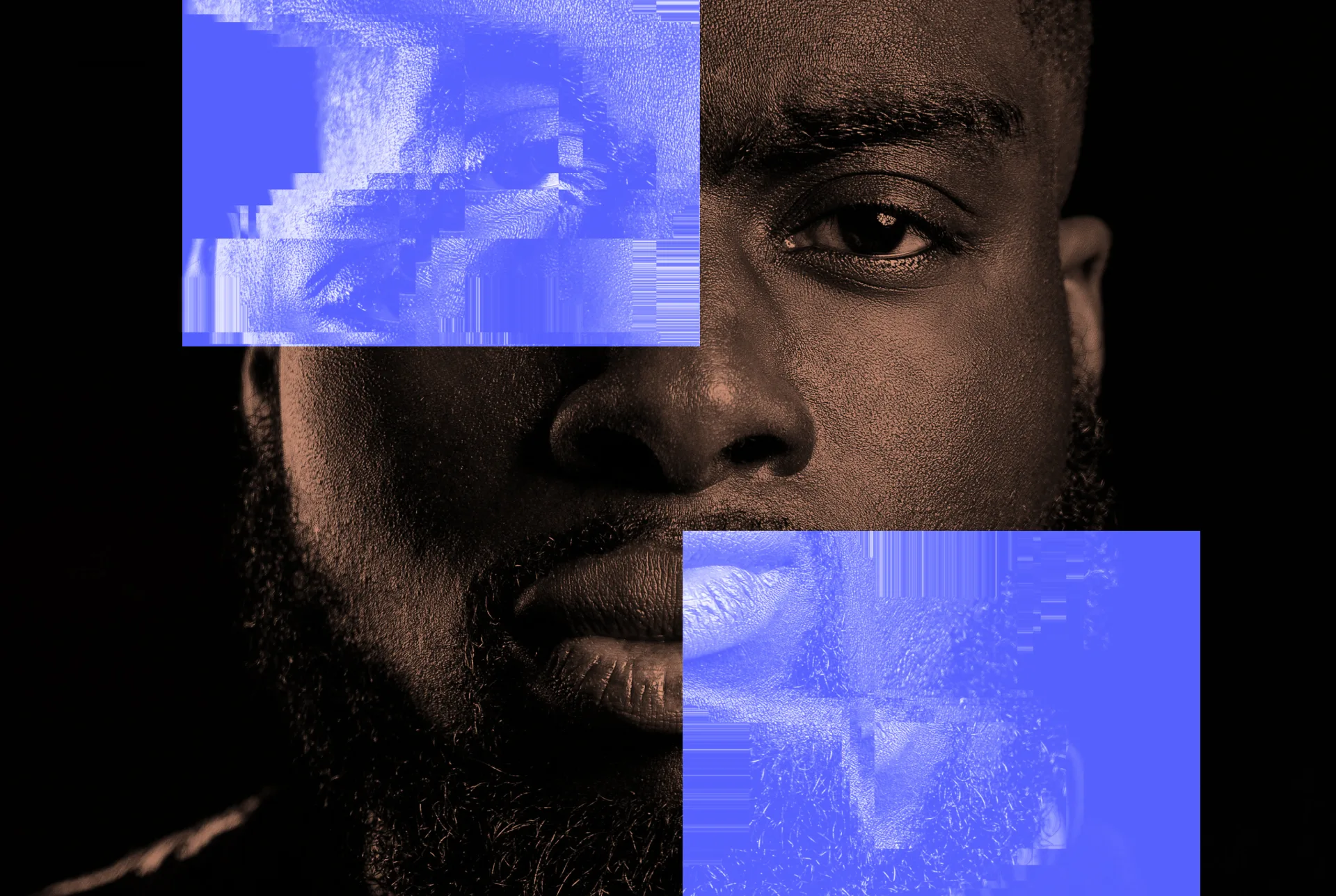

Surveillance technology that uses algorithmic tools can weaponize information about a person’s identity, behavior, and more against them. (Image: Danny Lines/Unsplash)

Harmful, unregulated surveillance and investigative technologies can increase the risk of wrongful conviction and strip away the presumption of innocence.

Surveillance technologies that use algorithmic tools, such as facial recognition technology, frequently risk misinterpreting personal information or relying on inaccurate database records that mislead investigations. These tools often cast a wide net, pulling innocent people into a broken system that enables wrongful conviction. To make matters worse, there is little transparency into the internal workings of these tools or into how they collect, use, and store data.

Many technologies already in use were deployed before being fully tested and validated, and they, too, are unregulated and subject to little oversight. In addition to perpetuating racial bias, these technologies often store or exploit personal data, leaving vulnerable communities, especially those that have been historically criminalized, exposed to data harms. More often than not, these tools also foster tunnel vision among law enforcement, encouraging investigators to home in on a specific individual or individuals as persons of interest even when there is convincing exculpatory evidence.

As the use of surveillance and investigative technologies continues to proliferate, we are working to understand their social implications and advocating for more transparency, oversight, and regulation to protect innocent people and the public. In doing so, we are collaborating with partners, including the White House Office of Science and Technology Policy, to gather the data we need to implement the necessary reforms.
